Is AI making developers faster or just worse? A CTO builds a complex platform from scratch to test the "Stability Tax", then explains why "Vibe Coding" is dead and how the developer’s role is shifting from bricklayer to site foreman.

As CTO, I see it as my responsibility to help guide teams in the effective, secure, and responsible deployment of AI tools. Throughout 2024 and 2025, the narrative around AI-assisted software development has shifted from frenzied optimism to cautious realism, and even pessimism.

To get a first-hand understanding of the impact of AI on the software development lifecycle (SDLC), I decided to run an experiment: write a reasonably complex system from scratch using AI. I didn’t want a "Hello World" or another "To Do" app; I wanted something realistic that could run at scale, like the systems we build in the enterprise world.

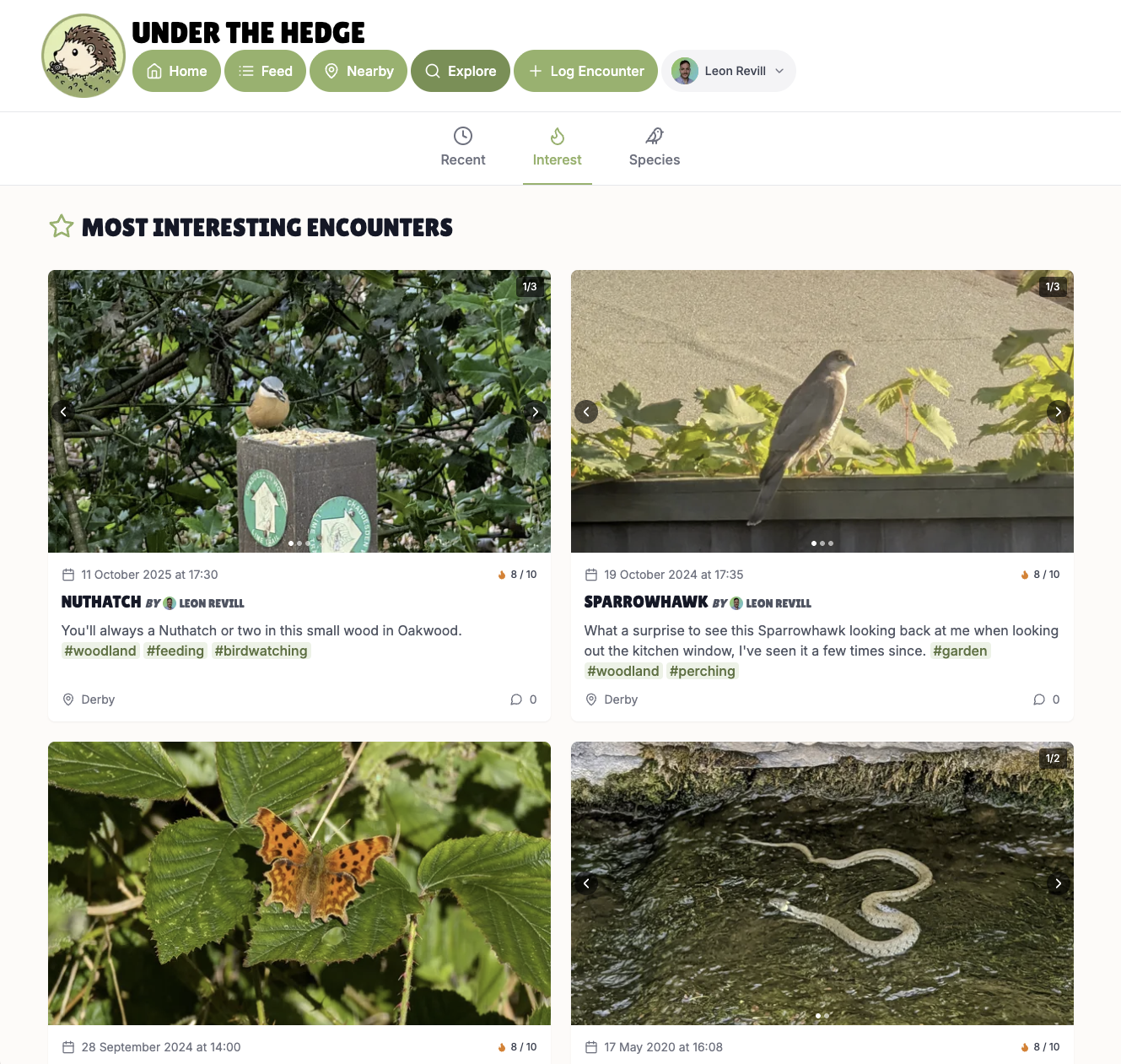

The result is Under The Hedge—a fun project blending my passion for technology and wildlife.

The experiment yielded several key findings, validating and adding practical context to the broader industry trends:

I set out to build a community platform for sharing and discovering wildlife encounters—essentially an Instagram/Strava for wildlife.

To give you a sense of the project's scale, it includes:

You can check it out here: https://www.underthehedge.com

Before I share what I found while developing Under The Hedge, let's look at what the rest of the industry is saying, based on studies from the last couple of years.

As we come to the end of 2025, the narrative surrounding AI-assisted development has evolved from simple "speed" to a more nuanced reality. The 2025 DORA (DevOps Research and Assessment) report defines this era with a single powerful concept: AI is an amplifier. It does not automatically fix broken processes; rather, it magnifies the existing strengths of high-performing teams and the dysfunctions of struggling ones.

The 2025 data reveals a critical shift from previous years. In 2024, early data suggested AI might actually slow down delivery. However, the 2025 DORA report confirms that teams have adapted: AI adoption is now positively correlated with increased delivery throughput. We are finally shipping faster.

But this speed comes with a "Stability Tax." The report confirms that as AI adoption increases, delivery stability continues to decline. The friction of code generation has been reduced to near-zero, creating a surge in code volume that is overwhelming downstream testing and review processes.
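DORA measures delivery stability with metrics such as change failure rate: the share of deployments that lead to a failure in production. As a minimal sketch, assuming a hypothetical `Deployment` record (an illustration, not a DORA-defined schema), the calculation looks like this:

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    # Hypothetical record: one production deployment and whether it caused a failure.
    deploy_id: str
    caused_failure: bool


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Fraction of deployments that led to a production failure (0.0 if there were none)."""
    if not deployments:
        return 0.0
    failures = sum(1 for d in deployments if d.caused_failure)
    return failures / len(deployments)


history = [
    Deployment("d1", False),
    Deployment("d2", True),
    Deployment("d3", False),
    Deployment("d4", False),
]
print(f"{change_failure_rate(history):.0%}")  # 25%
```

Because the metric is a ratio, a team can ship more deployments while the fraction that fails also rises; that combination is what the report describes as higher throughput paired with lower stability.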

This instability is corroborated by external studies. Research by Uplevel in 2024 found that while developers feel more productive, the bug rate spiked by 41% in AI-assisted pull requests. This aligns with the "vibe coding" phenomenon—generating code via natural language prompts without a deep understanding of the underlying syntax. The code looks right, but often contains subtle logic errors that pass initial review.

Although 90% of developers now use AI tools, a significant "Trust Paradox" remains: the 2025 DORA report highlights that 30% of professionals still have little to no trust in the code AI generates.

We are using the tools, but we are wary of them—treating the AI like a "junior intern" that requires constant supervision.

The Death of "DRY" (Don't Repeat Yourself)

The most damning evidence regarding code quality comes from GitClear’s 2025 AI Copilot Code Quality report. Analyzing 211 million lines of code, they identified a "dubious milestone" in 2024: for the first time on record, the volume of "Copy/Pasted" lines (12.3%) exceeded "Moved" or refactored lines (9.5%).

The report details an 8-fold increase in duplicated code blocks and a sharp rise in "churn": code that is written and then revised or deleted within two weeks. This indicates that AI is fueling a "write-only" culture in which it is easier to generate new, repetitive blocks of code than to refactor existing logic into reusable modules. We are building faster, but we are building "bloated" codebases that will be significantly harder to maintain in the long run.
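GitClear's exact methodology isn't reproduced here, but a naive way to spot duplicated blocks in your own codebase is to slide a fixed-size window over whitespace-stripped lines and flag any window that appears more than once. The function name `find_duplicate_blocks` and the default window of four lines are illustrative assumptions:

```python
from collections import defaultdict

Location = tuple[str, int]  # (filename, 1-based starting line number)


def find_duplicate_blocks(files: dict[str, str], window: int = 4) -> dict[tuple[str, ...], list[Location]]:
    """Map each run of `window` non-blank, whitespace-stripped lines that occurs
    more than once to every location where it starts."""
    seen: dict[tuple[str, ...], list[Location]] = defaultdict(list)
    for name, source in files.items():
        # Keep each line's original 1-based number next to its normalized text.
        lines = [(i + 1, line.strip()) for i, line in enumerate(source.splitlines()) if line.strip()]
        for start in range(len(lines) - window + 1):
            chunk = tuple(text for _, text in lines[start:start + window])
            seen[chunk].append((name, lines[start][0]))
    # Only windows seen in two or more places count as duplicates.
    return {chunk: locs for chunk, locs in seen.items() if len(locs) > 1}


files = {
    "a.py": "def area(w, h):\n    if w < 0 or h < 0:\n        raise ValueError('negative')\n    return w * h\n",
    "b.py": "def size(w, h):\n    if w < 0 or h < 0:\n        raise ValueError('negative')\n    return w * h\n",
}
for block, locations in find_duplicate_blocks(files, window=3).items():
    print(locations)  # [('a.py', 2), ('b.py', 2)]
```

A duplicated run longer than the window shows up as several overlapping matches, and renamed variables defeat this exact-text comparison, so dedicated clone detectors usually compare tokens or syntax trees instead.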

Finally, security remains a major hurdle. Veracode’s 2025 analysis found that 45% of AI-generated code samples contained security vulnerabilities, with languages like Java seeing security pass rates as low as 29%.

The data paints a clear picture: AI acts as a multiplier. It amplifies velocity, but if not managed correctly, it also amplifies bugs, technical debt, and security flaws.

My chosen tools were Gemini for architecture and planning, and Cursor for implementation. In Cursor, I used agent mode with the model set to auto.

Building Under The Hedge was an eye-opening exercise that both confirmed the industry findings and highlighted the practical, human element of AI-assisted development.

While I didn't keep strict time logs, I estimate I could have implemented this entire system (a reasonably complex, enterprise-scale platform) in less than a month of full-time work, roughly 9-5, five days a week. This throughput aligns perfectly with the DORA report's finding that AI adoption is positively correlated with increased delivery throughput.

The greatest personal impact for me, which speaks perhaps more about motivation than pure speed, was the constant feedback loop. In past personal projects, I often got bogged down in small, intricate details, leading to burnout. Using these tools, I could implement complete, complex functionality—such as an entire social feed system—in the time it took to run my son’s bath. The rapid progress and immediate results are powerful endorphin hits, keeping motivation high.

My experience also validated the industry's growing concerns about the "Stability Tax": the decline in delivery stability that accompanies increased code volume. I found that AI handles well-defined, isolated tasks exceptionally well; it built complex map components and sophisticated media UIs in seconds, tasks that would typically take me days or even weeks. However, this speed often came at the expense of quality:

Ultimately, I had to maintain a deep understanding of the systems to ensure best practices were implemented, confirming the "Trust Paradox" where developers treat the AI like a junior intern requiring constant supervision.

The security risks highlighted by Veracode were also apparent. The AI rarely prioritized security by default; I had to specifically prompt it to consider and implement security improvements.
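As a generic illustration (not code from Under The Hedge), SQL injection is a classic example of the kind of flaw that slips in when security isn't requested explicitly. The sketch uses Python's built-in `sqlite3` module and a hypothetical `users` table:

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Safer: the driver binds the value as a parameter, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR clause matches every row
print(len(find_user_safe(conn, payload)))    # 0: the payload is treated as a literal username
```

Parameter binding is the standard fix; the point is that it has to be an explicit, reviewed requirement rather than something you assume the assistant will do by default.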

Furthermore, the AI is only as good as the data it has access to. When I attempted to integrate the very new Cognito Hosted UI, the model struggled significantly, getting stuck in repetitive loops due to a lack of current training data. This forced me to step back and learn the new implementation details myself. Once I understood how the components were supposed to fit together, I could guide the AI to the correct solution quickly, highlighting that a deep conceptual understanding is still paramount.

Despite its flaws, AI proved to be a magnificent tool for learning. As a newcomer to Next.js and AWS Amplify, the ability to get working prototypes quickly kept me motivated. When I encountered functionality I didn't understand, I used the AI as a coach, asking it to explain the concepts. I then cross-referenced the generated code with official documentation to ensure adherence to best practices. By actively seeking to understand and then guiding the AI towards better solutions, I was able to accelerate my learning significantly.

To mitigate the "Stability Tax" and maximize the AI's velocity, a proactive, disciplined approach is essential:

So, should we stop using AI for software development?

Absolutely not. To retreat from AI now would be to ignore the greatest leverage point for engineering productivity we have seen in decades. Building Under The Hedge proved to me that a single developer, armed with these tools, can punch well above their weight class, delivering enterprise-grade architecture in a fraction of the time.

However, the era of blind optimism must end. The "Sugar Rush" of easy code generation is over, and the "Stability Tax" is coming due.

The data and my own experience converge on a single, inescapable truth: AI lowers the barrier to entry, but it raises the bar for mastery.

Because AI tends to bloat codebases and introduce subtle security flaws, the human developer is more critical than ever. Paradoxically, as the AI handles more of the syntax, our value shifts entirely to semantics, architecture, and quality control. We are transitioning from being bricklayers to being site foremen.

If we treat AI as a magic wand that absolves us of needing to understand the underlying technology, we will drown in a sea of technical debt, "dubious" copy-paste patterns, and security vulnerabilities. But if we treat AI as a tireless, brilliant, yet occasionally reckless junior intern (one that requires strict specifications, constant code review, and architectural guidance), we can achieve incredible things.

The path forward isn't to stop using the tools. It is to stop "vibe coding" and start engineering again. We must use AI not just to write code, but to challenge it, test it, and refine it.

The future belongs to those who can tame the velocity. I only wish my experiment had produced something that would make me loads of money instead of just tracking pigeons! 😂